Abstract

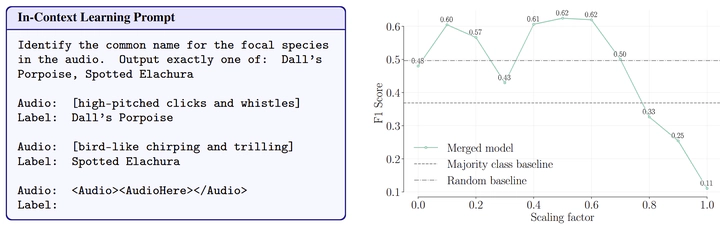

General-purpose foundation models capable of generalizing across species and tasks represent a promising new frontier in bioacoustics, with NATURELM-AUDIO being one of the most prominent examples. While its domain-specific finetuning yields strong performance on bioacoustic benchmarks, we observe that it also introduces trade-offs in instruction-following flexibility. For instance, NATURELM-AUDIO achieves high accuracy when prompted for either the common or scientific name individually, but its accuracy drops significantly when both are requested in a single prompt. These effects limit zero- and few-shot generalization to novel tasks. We address this by applying a simple model merging strategy that interpolates NATURELM-AUDIO with its base language model, recovering instruction-following capabilities with minimal loss of domain expertise. Finally, we show that this enables effective few-shot in-context learning, a key capability for real-world scenarios where labeled data for new species or environments are scarce.
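The abstract does not spell out the merging rule. A common reading of "interpolates" is linear weight interpolation, θ_merged = (1 − α)·θ_base + α·θ_finetuned, applied parameter by parameter with a single coefficient α. The sketch below illustrates only that idea, under several assumptions. The function name `interpolate_weights`, the coefficient `alpha`, and the toy dict-of-lists "state dict" are all hypothetical. The sketch also assumes that parameters present in both models share names and sizes. Parameters that exist only in the finetuned model, such as an audio encoder, are assumed to be copied unchanged. It is not the authors' implementation.

```python
from typing import Dict, List

# Hypothetical toy representation: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def interpolate_weights(base: StateDict, finetuned: StateDict, alpha: float) -> StateDict:
    """Linearly interpolate shared parameters: (1 - alpha) * base + alpha * finetuned.

    alpha = 0 recovers the base language model's weights; alpha = 1 keeps the
    finetuned (domain-specific) weights. Parameters found only in `finetuned`
    (assumed here to be domain-specific modules) are copied unchanged.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    merged: StateDict = {}
    for name, ft_vals in finetuned.items():
        if name not in base:
            # No counterpart in the base model: keep the finetuned weights as-is.
            merged[name] = list(ft_vals)
            continue
        base_vals = base[name]
        if len(base_vals) != len(ft_vals):
            raise ValueError(f"size mismatch for parameter {name!r}")
        merged[name] = [(1.0 - alpha) * b + alpha * f for b, f in zip(base_vals, ft_vals)]
    return merged


if __name__ == "__main__":
    base = {"llm.w": [0.0, 1.0]}
    finetuned = {"llm.w": [1.0, 3.0], "audio_encoder.w": [2.0]}
    print(interpolate_weights(base, finetuned, 0.5))
```

Sweeping `alpha` between 0 and 1 traces a path from the base model's general instruction following toward the finetuned model's domain expertise. Under this assumed rule, choosing the operating point is a matter of evaluating a few values of `alpha` on held-out tasks.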