NeXuS

Inter-operable Machine Learning with Universal Representations

Funded by the Italian Ministry of University and Research (MUR) under the Fondo Italiano per la Scienza (FIS 2) programme.

Machine Learning Models Can Share Knowledge. Let’s Use That Power

Humans would give anything to transfer their thoughts and knowledge seamlessly. Entire civilizations have been built on the slow, painstaking process of passing down information through language, writing, and education. But machine learning (ML) models don’t have this limitation: they encode knowledge in structured representations that, if properly aligned, can be shared, transferred, and repurposed directly. Yet today’s AI landscape largely ignores this potential. Each model operates in isolation, requiring costly retraining and vast amounts of data to adapt to new tasks.

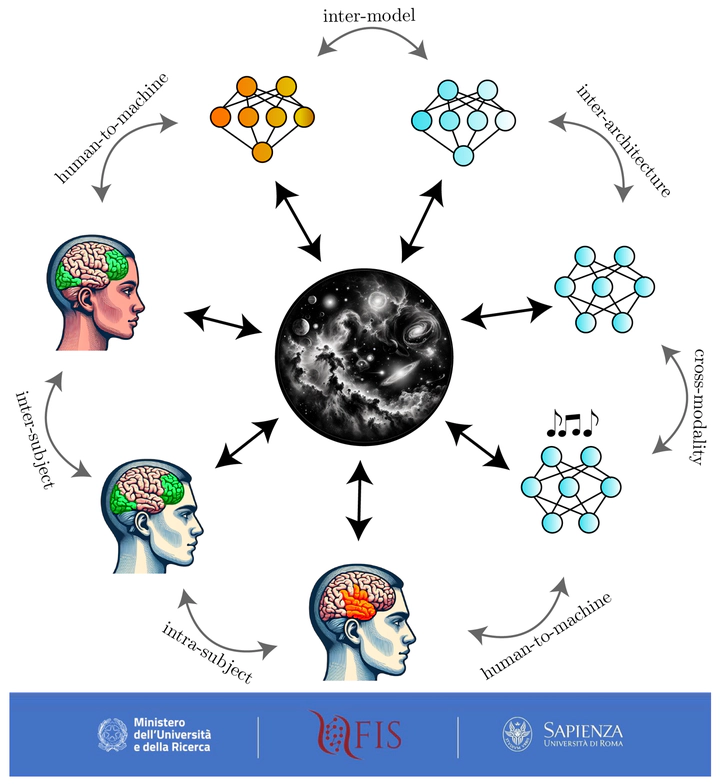

NeXuS challenges this paradigm. Instead of treating AI models as independent silos, we introduce new frameworks and methods for inter-operability, in which models collaborate, build on each other’s knowledge, and become reusable building blocks for future innovation.

We’ve applied these principles to key challenges in model inter-operability: directly aligning representations across models, reducing interference in model merging, enabling model stitching in the spectral domain, and driving highly efficient evolutionary merging of large language models (LLMs). Yet this is only the beginning, and there’s far more on the horizon!

Reuse over Retraining for a More Sustainable AI

With the explosion of pretrained models and fine-tuned variants, most ML applications today do not require training from scratch. Despite this, the dominant practice remains retraining and fine-tuning models over and over, wasting compute, energy, and resources.

NeXuS disrupts this inefficient cycle. By enabling models to be reused, merged, and repurposed without additional training, we promote a sustainable and scalable AI ecosystem. Instead of discarding existing knowledge, we embrace model composability, allowing AI systems to evolve and adapt without redundant training.

Shifting from Parameters to Representations

Traditional approaches to model transfer focus on parameters, but parameters are task-specific, modality-dependent, and even tied to random initialization. They do not generalize well across architectures, datasets, or domains.

NeXuS takes a different approach. We shift the focus from parameters to representations, treating the internal feature spaces of neural networks as first-class citizens. By aligning these learned representations across models, NeXuS enables:

- Cross-model communication: Different networks can share knowledge without requiring shared training data or fine-tuning.

- Architecture-agnostic inter-operability: Models with different architectures and training histories can still collaborate.

- Multi-modal synergy: Representations from text, images, audio, 3D data, and even neural signals can be bridged, enabling powerful cross-domain applications.
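To make “aligning representations” more concrete, here is a minimal sketch of one standard, generic alignment technique, orthogonal Procrustes. It is not presented as NeXuS’s own method. It assumes two models whose embedding spaces have the same dimension and a set of anchor inputs encoded by both; the function name `procrustes_align` and the toy data (model B’s space is an exact hidden rotation of model A’s) are illustrative assumptions.

```python
import numpy as np


def procrustes_align(X: np.ndarray, Y: np.ndarray) -> np.ndarray:
    """Return the orthogonal R minimizing ||X @ R - Y||_F (orthogonal Procrustes).

    X, Y: (n_anchors, dim) embeddings of the SAME anchor inputs from two
    different models. Assumes both spaces share the same dimension.
    """
    # SVD of the cross-covariance X^T Y = U S V^T gives the optimum R = U V^T.
    U, _, Vt = np.linalg.svd(X.T @ Y)
    return U @ Vt


rng = np.random.default_rng(0)
dim, n_anchors = 8, 100

# Toy setup (assumption): model B's space is a hidden rotation Q of model A's.
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
X_anchors = rng.normal(size=(n_anchors, dim))  # model A's anchor embeddings
Y_anchors = X_anchors @ Q                      # model B's anchor embeddings

R = procrustes_align(X_anchors, Y_anchors)

# Map new, unseen model-A embeddings into model B's space.
X_new = rng.normal(size=(5, dim))
Y_pred = X_new @ R
print(np.allclose(Y_pred, X_new @ Q))  # True
```

Once `R` is estimated from the anchors, new embeddings from model A can be mapped into model B’s space and handed to B’s downstream layers, which is the basic idea behind model stitching. Real models are rarely related by an exact rotation, so in practice such a fit is only approximate.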

Expected Outcomes

NeXuS aims to deliver new theoretical frameworks and practical methods for model inter-operability, including representation alignment techniques, training-free model merging strategies, and reusable model components that reduce computational and environmental costs in AI development.

Join Us: Open PhD and Postdoc Positions

We are actively looking for PhD students and postdoctoral researchers eager to work on training-free model editing techniques, model merging, representation alignment, and more. If you are passionate about breaking down silos in AI and contributing to a future where machine learning models collaborate rather than compete, we encourage you to apply.

Our work spans multiple disciplines, including geometric deep learning, neuroscience-inspired AI, modular deep learning, and multi-modal learning, but we are also open to new perspectives and interdisciplinary contributions.

If you are excited by these challenges and want to help redefine how AI models communicate and evolve, get in touch!